Know Your Agent. Know Your Audience. Kill Your Anxiety.

AI agents represent your brand 24/7, but how do you know they're performing well? KYA Lab gives you visibility into real-world conversations so you can trust, improve, and scale your agents.

The Problem We Solve

AI agents are everywhere—voice, chat, workflows—but evaluation is still stuck in demo mode.

- You don't know how they're performing in real conversations

- They might be off-brand, off-policy, or off-track—without you knowing

- You're flying blind on quality, tone, safety, and effectiveness

What KYA Lab Does

Evaluate your AI agents—clearly, continuously, confidently.

- Task success: Did the agent complete the action?

- User experience: Was the interaction fast, clear, and helpful?

- Brand alignment: Tone, policy, escalation paths

- Failure patterns: Hallucinations, loops, inconsistencies

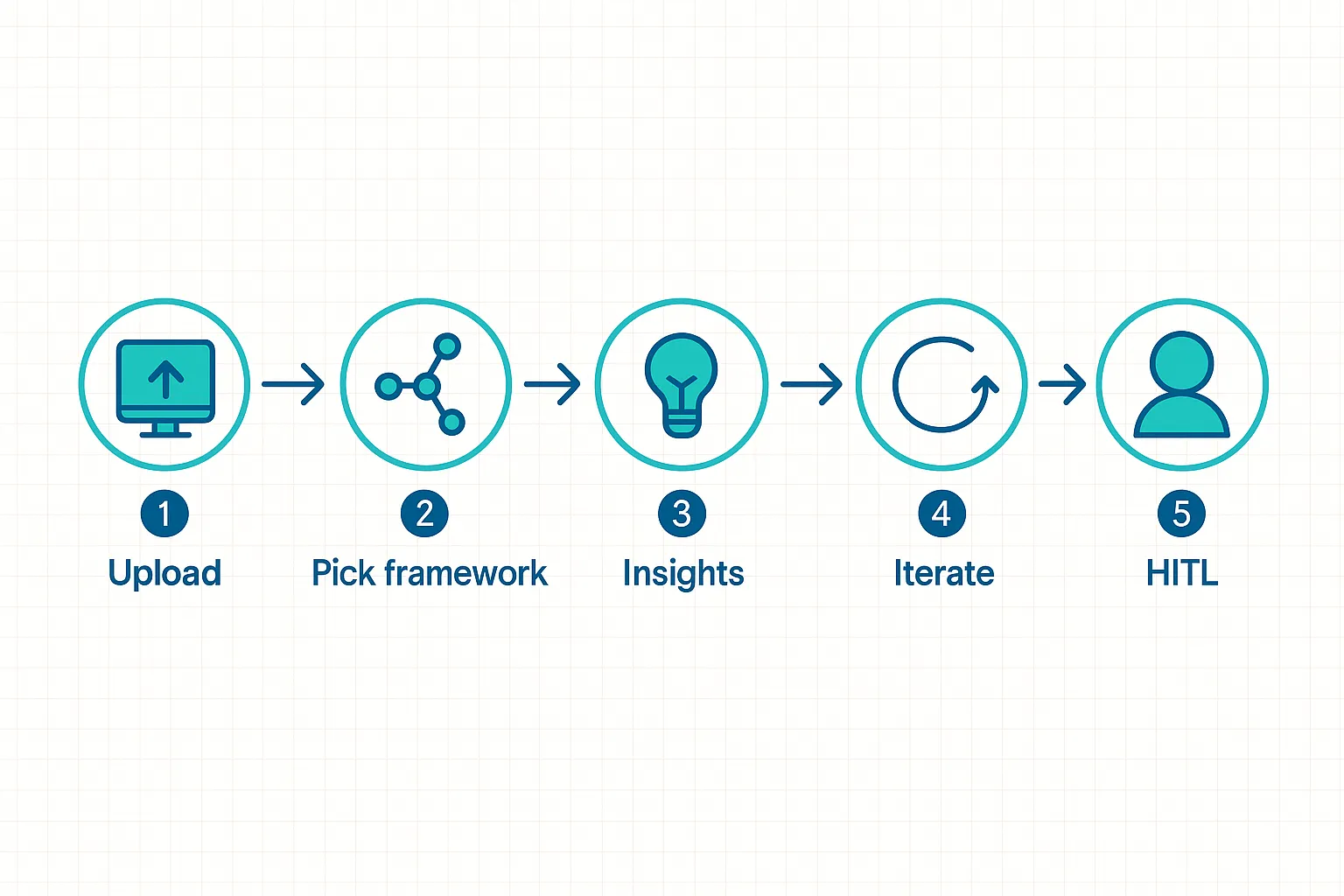
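
To make one of the failure patterns above concrete, here is a minimal sketch of how a conversational loop might be flagged in a transcript. It is an illustration only, not KYA Lab's method: the `(speaker, text)` transcript format, the function name `find_repeated_agent_turns`, and the `min_repeats` threshold are all assumptions made for this example.

```python
from collections import Counter


def find_repeated_agent_turns(turns, min_repeats=3):
    """Return agent messages that occur at least `min_repeats` times.

    `turns` is a list of (speaker, text) tuples. This transcript format
    is an assumption for the sketch, not a documented KYA Lab schema.
    """
    counts = Counter(
        " ".join(text.lower().split())  # normalize case and whitespace
        for speaker, text in turns
        if speaker == "agent"
    )
    return [message for message, n in counts.items() if n >= min_repeats]


# Hypothetical transcript in which the agent keeps asking the same question.
transcript = [
    ("user", "I want to cancel my order."),
    ("agent", "Can you share your order number?"),
    ("user", "It's 1042."),
    ("agent", "Can you share your order number?"),
    ("user", "1042!"),
    ("agent", "Can you share your  order number?"),
]

print(find_repeated_agent_turns(transcript))
```

Exact-match counting only catches verbatim repetition; a production check would likely need fuzzier matching to catch paraphrased loops.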

How It Works

- Upload or connect your agent data (voice, chat, task logs)

- Pick an evaluation framework (CX quality, model safety, domain QA)

- Receive structured insights and performance scores

- Iterate with scorecards to improve and align models

- Optional: human-in-the-loop tools for deeper QA and reinforcement learning (RL)
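
The steps above can be sketched in a few lines of Python: load conversation records, apply a framework of scoring criteria, and aggregate the results into a scorecard. Everything here is hypothetical, including the `Conversation` fields, the `CX_QUALITY` criteria, the 5-second responsiveness threshold, and `build_scorecard`; it shows the general shape of such a workflow, not KYA Lab's actual API or scoring rules.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Conversation:
    """One logged agent conversation (hypothetical fields)."""
    conversation_id: str
    task_completed: bool
    response_seconds: list[float]
    escalated_correctly: bool


# Hypothetical framework: each criterion maps a conversation to a score in [0, 1].
CX_QUALITY = {
    "task_success": lambda c: 1.0 if c.task_completed else 0.0,
    # Assumed threshold: an average response time of 5 seconds or less passes.
    "responsiveness": lambda c: 1.0 if mean(c.response_seconds) <= 5.0 else 0.0,
    "escalation": lambda c: 1.0 if c.escalated_correctly else 0.0,
}


def build_scorecard(conversations, framework):
    """Average each criterion's score across all conversations."""
    return {
        name: round(mean(score(c) for c in conversations), 2)
        for name, score in framework.items()
    }


# Made-up sample data for illustration only.
logs = [
    Conversation("c1", True, [2.0, 3.5], True),
    Conversation("c2", False, [8.0, 9.0], True),
]

print(build_scorecard(logs, CX_QUALITY))
```

Keeping the framework as a plain mapping of named criteria means a different framework (for example, model safety or domain QA) could be swapped in without changing the aggregation step.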

Who It's For

- CX & Ops teams running customer-facing agents

- AI teams fine-tuning LLMs and scoring outputs

- Legal, finance & healthcare teams needing auditability

What You Get

Standardized Frameworks

Evaluation frameworks tailored to your specific needs

Real-time Scorecards

Visibility and insights for continuous improvement

Scalable Workflows

Human + AI workflows that scale with your needs

Peace of Mind

Confidence to launch and grow AI with solid evaluation